Apple continues to expand Siri’s capabilities, adding context-aware technology that could redefine how users interact with the digital assistant. As part of the larger Apple Intelligence feature set, Siri is on track to become more interactive and intuitive, learning to better understand onscreen content and user context. To prepare, Apple is equipping developers with App Intent APIs so they can build apps that work seamlessly with the next generation of Siri. Here’s an in-depth look at these upcoming features, how developers are preparing, and what users can expect as Siri becomes a more responsive, AI-powered assistant.

Apple’s App Intent APIs: A Foundation for Siri’s Future

In its latest beta versions, Apple has introduced new App Intent APIs that let developers integrate their apps with Siri’s enhanced capabilities. These APIs are central to Apple’s goal of making Siri more contextually aware and responsive. Here’s how they’re shaping the future of Apple Intelligence:

- Enabling Onscreen Content Awareness: With App Intent APIs, Siri and Apple Intelligence will soon be able to interpret content directly from apps. For instance, users could view a webpage or document and ask Siri to summarize or answer questions about it.

- Improved User Interactivity: These APIs let apps describe their content and actions to the system, so Siri can work with onscreen elements directly instead of requiring users to navigate the app themselves.

- Preparation for Full Integration: Apple is releasing these tools in advance so that developers have several months to prepare, ensuring apps are Siri-ready when these features roll out fully to the public.
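Apps describe their actions to the system through Apple’s App Intents framework in Swift. The sketch below, which assumes iOS 17 or later for the `AppShortcut` initializer it uses, exposes a hypothetical “Add Note” action to Siri and Shortcuts. `AddNoteIntent`, `NotesShortcuts`, and the `cleanedNoteText` helper are illustrative names, not taken from any Apple sample, and the newer onscreen-awareness additions from the iOS 18.2 betas are not shown. The framework code sits inside `#if canImport(AppIntents)` so the plain helper still compiles on platforms without the framework.

```swift
import Foundation
#if canImport(AppIntents)
import AppIntents
#endif

/// Hypothetical helper: trims dictated text and returns nil if nothing is left to save.
func cleanedNoteText(_ raw: String) -> String? {
    let trimmed = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    return trimmed.isEmpty ? nil : trimmed
}

#if canImport(AppIntents)
/// Hypothetical action that Siri, Spotlight, and Shortcuts can discover and run.
struct AddNoteIntent: AppIntent {
    static let title: LocalizedStringResource = "Add Note"

    // Non-optional parameter: the system prompts for it if the request omits it.
    @Parameter(title: "Text")
    var text: String

    func perform() async throws -> some IntentResult & ProvidesDialog {
        guard let note = cleanedNoteText(text) else {
            return .result(dialog: "There was nothing to save.")
        }
        // A real app would persist `note` in its own storage layer here.
        return .result(dialog: "Saved \"\(note)\".")
    }
}

/// Registers a spoken phrase so the action works with Siri without user setup.
struct NotesShortcuts: AppShortcutsProvider {
    static var appShortcuts: [AppShortcut] {
        AppShortcut(
            intent: AddNoteIntent(),
            phrases: ["Add a note in \(.applicationName)"],
            shortTitle: "Add Note",
            systemImageName: "note.text"
        )
    }
}
#endif
```

With the app installed, a user could say “Add a note in” followed by the app’s name; because the phrase does not supply the note text, Siri would then ask for it.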


Advanced Onscreen Awareness: Siri’s Ability to Process Onscreen Content

The most anticipated feature on Siri’s roadmap is onscreen awareness: Siri’s upcoming ability to understand and act on content displayed on a user’s screen. Here’s how onscreen awareness is expected to work:

Key Functions of Siri’s Onscreen Awareness

- Real-Time Content Interaction: Siri will be able to retrieve data from documents, emails, and messages, allowing users to quickly complete actions based on what’s displayed. For example, if a friend texts their new address, a user could ask Siri to add it to that friend’s contact card.

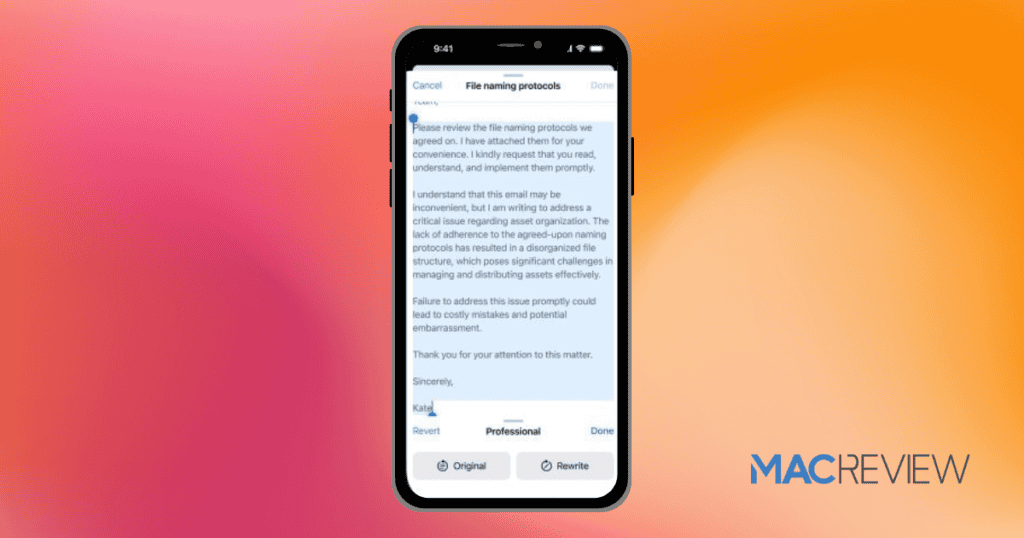

- Enhanced Visual Recognition: Siri’s integration with ChatGPT adds visual recognition. Users can ask Siri about an image or a document, such as a PDF, and Siri will take a screenshot and send it to ChatGPT for analysis. The result is a description of the image or a summary of the document.

- Natural Language Commands: Users can ask Siri to summarize or interpret onscreen information, enhancing productivity without requiring users to switch between multiple apps.

While the ChatGPT integration is currently limited to analyzing screenshots, it sets the stage for Siri’s full onscreen awareness, expected in iOS 18.4 in 2025.

ChatGPT Integration: A New Layer of Intelligence for Siri

With the release of iOS 18.2, Apple has integrated ChatGPT with Siri for specific tasks involving photos and documents. Here’s how this collaboration works and why it’s significant:

- Image Analysis: Users can now ask Siri questions like “What’s in this photo?” Siri will capture the screen, relay it to ChatGPT, and provide a descriptive response, which could be useful for identifying unknown items or scenes.

- Document Insights: Siri can also analyze documents, such as PDFs or presentations, and provide a summary or key points. This is especially useful for students and professionals who need a quick overview of a long or complex document.

While not yet the complete onscreen awareness feature Apple envisions, this integration is a significant leap towards making Siri a truly interactive assistant.

Expected Timeline: iOS 18.4 and the Next Generation of Siri

According to Bloomberg’s Mark Gurman, Apple plans to release full onscreen awareness in iOS 18.4, which is expected in spring 2025. Here’s what we know about the timeline and Apple’s rollout plan:

- Current Availability: iOS 18.2 introduces limited ChatGPT integration with Siri.

- Developer Preparation: Developers have access to App Intent APIs now, allowing them to prepare for Siri’s onscreen awareness and other advanced features.

- Full Feature Rollout: The complete onscreen awareness, personal context, and in-app actions for Siri are likely to debut with iOS 18.4, aligning with Apple’s broader AI development timeline.


Onscreen Awareness vs. ChatGPT Integration: Key Differences

Apple’s onscreen awareness and ChatGPT integration may seem similar, but they offer distinct functionalities. Here’s a breakdown of each feature:

| Feature | Function | Example |
|---|---|---|
| ChatGPT Integration | Assesses screenshots to answer questions about images or documents | Ask Siri, “What’s in this photo?” |
| Onscreen Awareness | Siri directly interacts with onscreen elements and performs actions | Say, “Add this address to their contact card” after receiving a message |

These differences highlight how Apple is layering Siri’s intelligence, using ChatGPT for specific tasks while planning a broader onscreen awareness capability that allows Siri to take actions based on context.

How Developers Can Prepare for Siri’s Expanded Capabilities

Apple’s early release of App Intent APIs gives developers the tools they need to integrate apps with Siri’s upcoming features. Here’s how developers can leverage these APIs:

- Content Recognition: These APIs let developers make in-app content recognizable to Siri, so users can ask questions about the items an app displays.

- Enhanced User Commands: App Intent APIs enable developers to provide Siri with specific actions that users can activate through voice commands, creating a more intuitive experience.

- Early Adoption: Apple encourages developers to start adopting these APIs now, so Siri can interact with a broader range of apps once iOS 18.4 is released.
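For content recognition, App Intents models in-app items as entities that Siri and Shortcuts can look up. The sketch below exposes a hypothetical `Recipe` type as an `AppEntity` with an `EntityStringQuery`, so the system can resolve a recipe by identifier or by name. `Recipe`, `sampleRecipes`, `recipes(matching:in:)`, `RecipeEntity`, and `RecipeQuery` are illustrative names, and the in-memory array stands in for a real data store. This is the entity layer of App Intents, not the iOS 18.2 onscreen-awareness API itself, which, as we understand it, builds on app entities like this one.

```swift
import Foundation
#if canImport(AppIntents)
import AppIntents
#endif

/// Hypothetical app model; stands in for whatever the app displays.
struct Recipe {
    let id: UUID
    let name: String
}

/// Hypothetical in-memory catalog; a real app would query its database.
let sampleRecipes = [
    Recipe(id: UUID(), name: "Pad Thai"),
    Recipe(id: UUID(), name: "Lemon Tart"),
]

/// Case-insensitive name search, shared by the entity query below.
func recipes(matching term: String, in catalog: [Recipe]) -> [Recipe] {
    catalog.filter { $0.name.range(of: term, options: .caseInsensitive) != nil }
}

#if canImport(AppIntents)
/// Makes a recipe addressable by Siri and Shortcuts.
struct RecipeEntity: AppEntity {
    static let typeDisplayRepresentation: TypeDisplayRepresentation = "Recipe"
    static let defaultQuery = RecipeQuery()

    let id: UUID
    let name: String

    var displayRepresentation: DisplayRepresentation {
        DisplayRepresentation(title: "\(name)")
    }
}

/// Lets the system find recipes by stored identifier or by spoken name.
struct RecipeQuery: EntityStringQuery {
    func entities(for identifiers: [UUID]) async throws -> [RecipeEntity] {
        sampleRecipes
            .filter { identifiers.contains($0.id) }
            .map { RecipeEntity(id: $0.id, name: $0.name) }
    }

    func entities(matching string: String) async throws -> [RecipeEntity] {
        recipes(matching: string, in: sampleRecipes)
            .map { RecipeEntity(id: $0.id, name: $0.name) }
    }

    func suggestedEntities() async throws -> [RecipeEntity] {
        sampleRecipes.map { RecipeEntity(id: $0.id, name: $0.name) }
    }
}
#endif
```

Keeping the search logic in a plain function means it can be unit-tested without the framework, while the query types stay thin adapters over the app’s own data.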


The Future of Siri: Key Features to Anticipate

Looking ahead, Siri’s advanced capabilities, including onscreen awareness, represent a significant evolution for Apple’s digital assistant. Here’s what users can expect:

- Personal Context: Siri will soon be able to understand user preferences and context more deeply, allowing for more personalized assistance.

- Expanded In-App Actions: Siri’s ability to execute tasks within apps will increase, simplifying workflows and reducing time spent navigating multiple apps.

- Seamless Cross-Platform Integration: Apple’s goal is for Siri to offer the same intuitive interactions across all Apple devices, including iPhone, iPad, and Mac.

Apple’s roadmap suggests a future where Siri seamlessly integrates with onscreen content, personalized user information, and app-specific actions, transforming it into an indispensable digital assistant.

MacReview Verdict: Preparing for the Next Generation of Siri

Apple’s investment in Siri’s evolution, with tools like App Intent APIs and ChatGPT integration, reflects its commitment to creating a more intelligent, contextually aware assistant. As we await the full rollout in iOS 18.4, developers can start preparing apps for this advanced functionality, while users can look forward to a Siri that interacts seamlessly with their onscreen content and personal context.