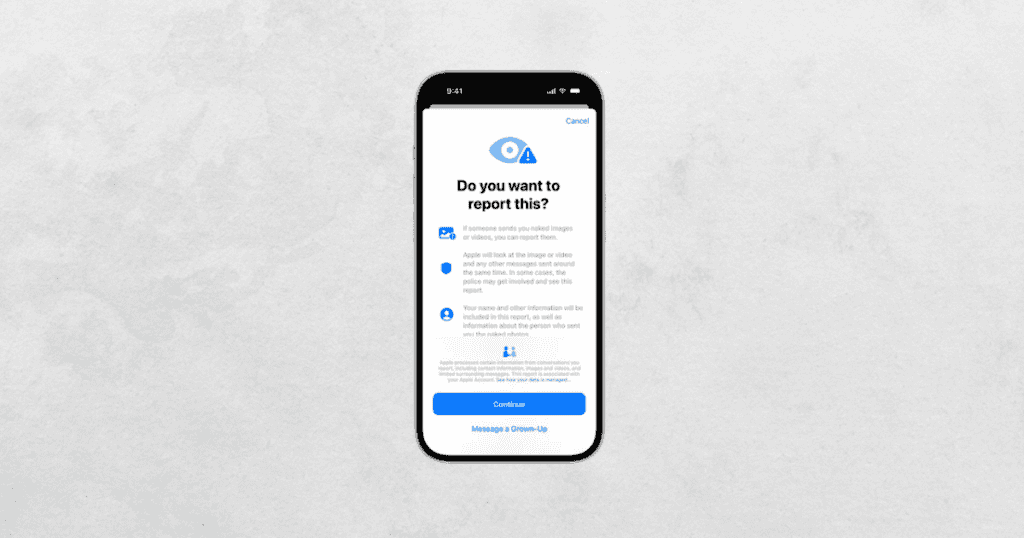

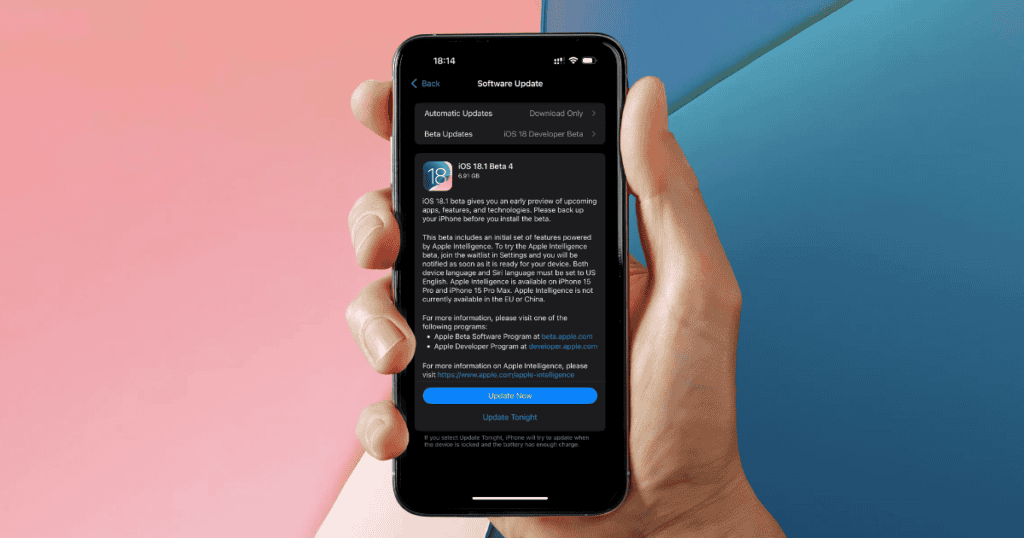

With the release of iOS 18.2, Apple introduces a new tool designed to improve the safety of younger users: a feature that lets children report nudity they receive in iMessage. The feature aims to protect children from inappropriate content and gives them a direct way to act on it, while on-device processing keeps the detection step private. Its broader implications extend to digital safety norms and parental guidance, balancing privacy, user control, and security.

A New Standard for Child Safety in iMessage

The introduction of this reporting feature addresses a growing need for child-friendly safety tools in digital communication platforms. By allowing children to report nudity directly, Apple positions itself as a leader in online protection for minors. The feature operates by detecting explicit images and videos using on-device intelligence. This means that content is analyzed locally on the child’s device, ensuring both privacy and quick intervention.

Here’s how the new reporting feature stands out:

- Immediate Nudity Detection: As soon as explicit content is detected, the image or video is blurred, and the child is presented with options to report the content, contact an adult, or seek help.

- Child-Friendly Interface: The feature is designed to be easy for children to navigate, ensuring they feel empowered to report inappropriate material without confusion.

- Comprehensive Reporting: When a report is made, not only is the inappropriate content sent to Apple, but so are surrounding messages and contact details, ensuring thorough reviews and appropriate action.

By fostering a more proactive role for children in managing their digital safety, this feature sets a new precedent for how tech companies can protect younger users.

How the Reporting System Works

The iOS 18.2 feature works across several Apple apps and services, including iMessage, FaceTime, AirDrop, and Photos. On-device analysis detects nudity in incoming messages or media. Upon detection, the user is presented with several options:

- Report the Content: Users can send the flagged image or video, along with contextual messages and contact information, directly to Apple for review.

- Message a Trusted Adult: The system also allows children to notify a parent or guardian immediately, creating a direct line of support.

- Seek Help: In cases where more guidance is needed, children can access help resources provided by Apple, ensuring they are never left without assistance.

Apple’s review process includes:

- Content Evaluation: Apple reviews the submitted report, analyzing both the flagged image and its accompanying context to determine the appropriate action.

- Possible Actions: Offenders may face messaging restrictions, and in extreme cases Apple may contact law enforcement.

Because detection runs on the device and content is sent to Apple only when a child files a report, Apple balances user privacy with comprehensive protection.
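To make the contents of a report concrete, here is a minimal Python sketch of how the flagged media, surrounding messages, and sender contact might be bundled. Everything in it is hypothetical: `Message`, `Report`, `build_report`, and especially `context_window` are illustrative names, and Apple has not published how many surrounding messages a report includes or how the data is structured.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    sender: str                     # contact identifier of whoever sent the message
    text: str = ""
    media_id: Optional[str] = None  # set when the message carries an image or video


@dataclass
class Report:
    flagged_media_id: str
    sender_contact: str
    surrounding_messages: List[Message] = field(default_factory=list)


def build_report(conversation: List[Message], flagged_index: int,
                 context_window: int = 2) -> Report:
    """Bundle the flagged media with nearby messages and the sender's contact.

    context_window is an assumption made for this sketch; the real number of
    surrounding messages is not documented in the article.
    """
    flagged = conversation[flagged_index]
    if flagged.media_id is None:
        raise ValueError("flagged message carries no media")
    start = max(0, flagged_index - context_window)
    end = min(len(conversation), flagged_index + context_window + 1)
    # Keep the neighbours on both sides, but not the flagged message itself.
    context = [m for i, m in enumerate(conversation[start:end], start)
               if i != flagged_index]
    return Report(flagged.media_id, flagged.sender, context)
```

The point of the sketch is that a report is assembled only on request and carries context beyond the image itself, which is what lets a reviewer judge the situation rather than a single file.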

Benefits of the Nudity Reporting Feature

This new feature in iOS 18.2 provides a range of benefits that extend beyond immediate protection. These include:

- Empowering Young Users: The ability to report explicit content themselves gives children control over their digital experiences, fostering confidence in navigating the online world.

- Proactive Safety Measures: Automatic nudity detection blurs explicit content before it can be viewed, reducing the risk of exposure.

- Privacy-Centered Technology: The use of on-device intelligence protects children’s privacy, ensuring content is never sent off-device unless a report is made.

- Support for Guardians: The feature provides parents and guardians with tools to monitor and assist in managing digital safety, strengthening communication between children and adults.

Steps in the Reporting Process

Here’s a simplified outline of how the reporting feature functions:

- Detection: When an explicit image or video is detected, it is automatically blurred.

- User Prompt: The child is given options to report the content, notify an adult, or seek further help.

- Comprehensive Report: If the child chooses to report, the image, surrounding messages, and contact information are sent to Apple.

- Apple’s Review: Apple’s team evaluates the report and determines the next steps, such as disabling the sender’s messaging capabilities or contacting authorities if necessary.

This clear, structured process makes it easy for children to respond to inappropriate content swiftly and effectively.
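The steps above can be sketched as a small decision flow. This is an illustration under stated assumptions, not Apple's implementation: `Action`, `present_media`, and `handle_choice` are hypothetical names, and the `is_explicit` callable stands in for the on-device classifier, whose real interface is not described here.

```python
from enum import Enum, auto
from typing import Callable, Optional


class Action(Enum):
    REPORT = auto()
    MESSAGE_ADULT = auto()
    GET_HELP = auto()


def present_media(media: bytes, is_explicit: Callable[[bytes], bool]) -> dict:
    """Detection step: classify locally, blur if flagged, and offer the options."""
    flagged = is_explicit(media)
    return {"blurred": flagged, "prompt": list(Action) if flagged else []}


def handle_choice(action: Action, media_id: str) -> Optional[dict]:
    """Prompt and report steps: in this sketch only REPORT yields outbound data."""
    if action is Action.REPORT:
        return {"media_id": media_id,
                "include_context": True,
                "include_contact": True}
    # Messaging an adult or opening help resources sends nothing for review here.
    return None
```

Modeling the choice as an explicit enum keeps the privacy property easy to check: the only code path that produces data for review is the one the child selects.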

Impact on Digital Safety Norms

The introduction of this feature not only addresses the immediate need for protecting children from explicit content but also influences the broader landscape of digital safety. The ability for young users to take action against inappropriate content represents a shift toward more proactive measures in online security.

As the digital environment continues to evolve, features like this one are likely to become a cornerstone of child protection efforts. Apple’s commitment to on-device intelligence means that safety features do not have to compromise user privacy, a key concern in today’s tech-driven world.

Moreover, the feature encourages a dialogue between children and their guardians, reinforcing the importance of communication in navigating the challenges of online interactions. By integrating safety tools with support resources, Apple fosters an environment where both children and parents can feel confident about their digital interactions.

Looking Ahead: Future Developments in Child Safety

The feature is rolling out first in Australia, with global implementation planned for the near future. As Apple continues to refine its approach to digital safety, we can expect several key developments:

- Broader Integration Across Platforms: The success of this feature in iMessage may lead to similar protections being added to other apps and services, further enhancing online safety.

- Refinement Through Feedback: Apple will likely use feedback from users, parents, and child protection organizations to fine-tune the feature, ensuring it remains as effective as possible.

- Education and Resources: The continued development of educational resources for parents and children will play a crucial role in maximizing the impact of this tool, helping users understand the importance of digital safety and reporting inappropriate content.

A Safer Digital Future for Children

The new nudity reporting feature in iOS 18.2 represents a critical advancement in protecting children in the digital landscape. By combining on-device intelligence with user-friendly reporting tools, Apple empowers young users to take control of their online interactions while ensuring privacy remains a priority. This innovative feature not only addresses the immediate challenges of explicit content but also paves the way for more comprehensive safety measures in the future, setting a new standard for child protection in the tech industry.

As this feature rolls out globally, its impact on digital communication norms and safety practices is expected to grow, making Apple’s approach a model for safeguarding younger users in an increasingly connected world.